The 4th meeting of the ALUIAR team was set up to finalise the storyboarding of the ideas suggested in previous meetings, to present the outcomes from the data gathering and to present options for some functional solutions.

Those attending were Mike Wald (MW), Garry Wills (GW), Seb Skuse (SS), Yunjia Li (YL), Mary Gobbi (MG), Lisa Roberts (LR), and E.A. Draffan (EA).

Apologies

Apologies were received from Lester Gilbert, Lisa Harris and Debbie Thackray.

Mike opened the meeting with a discussion document related to the functionality issues discussed at the outset of the project and comments collected from initial interviews. Accepted ideas are in red.

- Greater flexibility when moving backwards or forwards through a recording (e.g. by typing in a new time). At present the user can only move in 5-second ‘nudges’, drag the time slider, or change the playback speed if the recording format and player allow.

Possible Solutions:

a) Enter a time into a time entry box and the player will move to that time

b) Change ‘nudge’ time from 5 seconds to 1 second

c) Add an additional 1-second ‘nudge’ alongside the existing 5-second one

d) In the editor, when transcript text is selected into the editor text box, automatically move the player to the start Synpoint time of that text
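Options (a) and (c) could work roughly as follows. This is a minimal Python sketch used only to illustrate the logic. It assumes that times are typed as `hh:mm:ss`, `mm:ss` or plain seconds. `PlayerPosition` is a hypothetical stand-in for the player, because the player's real API is not described in these notes.

```python
# Minimal sketch: typed time entry (option a) and finer nudges (option c).
# PlayerPosition is a hypothetical stand-in, not the real player API.


def parse_time(text: str) -> float:
    """Convert 'ss', 'mm:ss' or 'hh:mm:ss' (seconds may be fractional) to seconds."""
    parts = text.strip().split(":")
    if not 1 <= len(parts) <= 3:
        raise ValueError(f"unrecognised time: {text!r}")
    seconds = 0.0
    for part in parts:
        # Each field is worth 60 times the field after it.
        seconds = seconds * 60 + float(part)
    return seconds


class PlayerPosition:
    """Tracks the current playback time, kept within 0..duration."""

    def __init__(self, duration: float) -> None:
        self.duration = duration
        self.current = 0.0

    def _clamp(self, t: float) -> float:
        return max(0.0, min(t, self.duration))

    def seek(self, typed: str) -> None:
        # Option (a): jump straight to a time typed into the entry box.
        self.current = self._clamp(parse_time(typed))

    def nudge(self, step: float) -> None:
        # Options (b)/(c): move by a fixed step, e.g. -1, +1, -5 or +5 seconds.
        self.current = self._clamp(self.current + step)


player = PlayerPosition(duration=600)
player.seek("2:30")   # current is now 150.0
player.nudge(-1)      # current is now 149.0
```

Clamping keeps both a mistyped time and a nudge past either end of the recording within range, so the player never receives an impossible position.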

- A drop-down box listing frequently used tags (e.g. for coding name of speaker and category code)

Possible Solution:

Implement a drop-down box listing frequently used tags, e.g.:

a) tags they have used on this recording

b) tags anyone has used on this recording

c) in alphabetic order
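The three orderings could share a single function. The Python sketch below is illustrative only. It assumes that tag usage for a recording is available as `(user, tag)` pairs. The user names and tags are made-up examples, and the frequency ordering with alphabetical tie-breaks is an assumption that these notes do not specify.

```python
# Minimal sketch of the tag drop-down ordering (options a-c).
# The (user, tag) data shape and the example tags are hypothetical.
from collections import Counter


def suggest_tags(tag_uses, user=None, alphabetical=False, limit=10):
    """Return tags to show in the drop-down for one recording.

    tag_uses: iterable of (user, tag) pairs already used on the recording.
    user: if given, count only that user's tags (option a); otherwise
          count everyone's tags (option b).
    alphabetical: if True, sort alphabetically (option c); otherwise most
          frequently used first, with ties broken alphabetically.
    """
    counts = Counter(tag for who, tag in tag_uses if user is None or who == user)
    if alphabetical:
        ranked = sorted(counts, key=str.lower)
    else:
        ranked = sorted(counts, key=lambda tag: (-counts[tag], tag.lower()))
    return ranked[:limit]


uses = [
    ("user_a", "Speaker:Interviewer"),
    ("user_a", "Code:Empathy"),
    ("user_b", "Code:Empathy"),
    ("user_b", "Code:Anxiety"),
    ("user_a", "Speaker:Interviewer"),
]
print(suggest_tags(uses))                 # ['Code:Empathy', 'Speaker:Interviewer', 'Code:Anxiety']
print(suggest_tags(uses, user="user_b"))  # ['Code:Anxiety', 'Code:Empathy']
```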

- Foot pedal control of the player

Possible Solution:

Find an available foot pedal that works with the player, or one that allows pedals to be assigned to keyboard shortcuts, and research the issues. EA to contact Hagger about suitable foot pedals.

- When manually transcribing a recording, it is also possible to annotate it. The start time of the clip is entered automatically, but the end time has to be entered manually. Synote allows a section of a created transcript to be selected and an annotation to be linked to that section, with the start and end times of the section entered automatically. The system would be easier to use if this could also be done without first having to save the transcript.

Possible Solution:

If there is text in the editor text box when Create is selected, automatically enter both the start and end Synpoint times as the Synmark start and end times
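The proposed behaviour amounts to taking the earliest start and the latest end of the Synpoints that cover the text in the editor. The Python sketch below is illustrative only. The `Synpoint` and `Synmark` shapes are hypothetical simplifications, not the system's actual data model.

```python
# Minimal sketch: fill a Synmark's start and end from the Synpoints of the
# transcript text currently in the editor text box, without saving first.
# The Synpoint/Synmark shapes below are hypothetical simplifications.
from dataclasses import dataclass


@dataclass
class Synpoint:
    start: float  # seconds
    end: float    # seconds
    text: str


@dataclass
class Synmark:
    start: float
    end: float
    note: str = ""


def synmark_from_editor(synpoints, note=""):
    """Create a Synmark spanning every Synpoint in the editor text box."""
    if not synpoints:
        # Nothing in the editor: fall back to entering the times manually.
        raise ValueError("editor text box is empty; enter the times manually")
    return Synmark(
        start=min(p.start for p in synpoints),
        end=max(p.end for p in synpoints),
        note=note,
    )


selected = [Synpoint(12.0, 15.5, "so how did"), Synpoint(15.5, 19.0, "you feel")]
mark = synmark_from_editor(selected, note="Code:Anxiety")
print(mark)  # Synmark(start=12.0, end=19.0, note='Code:Anxiety')
```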

- Facility to download the annotation data (e.g. to Microsoft Excel for statistical analysis, charts and graphs, for a report, or into other annotation tools). At present the information has to be copied and pasted.

Possible Solution:

Add CSV export of the Synmarks and the transcript to the print preview
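CSV export of Synmarks could look roughly like the following Python sketch, which uses the standard `csv` module. The column names and the dictionary shape of each Synmark are assumptions made for illustration. Joining several tags into one cell with `;` is also only one possible choice.

```python
# Minimal sketch of CSV export for Synmarks. The field names and the dict
# shape of each Synmark are assumptions for illustration only.
import csv
import io

FIELDS = ["start_seconds", "end_seconds", "title", "tags", "note"]


def synmarks_to_csv(synmarks):
    """Return CSV text (header plus one row per Synmark) ready to save or download."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)  # quotes fields containing commas, quotes or newlines
    writer.writerow(FIELDS)
    for mark in synmarks:
        writer.writerow([
            mark["start"],
            mark["end"],
            mark.get("title", ""),
            ";".join(mark.get("tags", [])),  # several tags kept in one cell
            mark.get("note", ""),
        ])
    return buffer.getvalue()


marks = [
    {"start": 12.0, "end": 19.0, "title": "Anxiety, early",
     "tags": ["Code:Anxiety", "Speaker:Interviewer"], "note": "check wording"},
]
text = synmarks_to_csv(marks)
```

To save the result to a file, open it with `newline=""`, as the `csv` documentation recommends. The `"utf-8-sig"` encoding is also useful, because its byte-order mark helps Excel recognise the file as UTF-8.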

- Making it harder to exit without saving and so losing changes made.

Solution: Already implemented in the current version

- Allowing the user to control recording playback while annotating, by providing media player controls in the annotation window. (At present a user can annotate a recording and the annotation can automatically read the time of the recording, but the user cannot easily replay a section of the recording while writing the annotation.)

Possible Solution:

Add the JavaScript player controls to the Synmark panel

- Redesign of interface to improve learnability

Possible Solution:

This is related to the current interface work and can be seen in the PowerPoint slide show below.

- Organise recordings into groups and categories to make them easier to find and manage

Possible Solution:

Add tags to the title field

If categories were used, they would have to be hard-coded, and not all of the categories would be suitable.
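Tags in the title field avoid hard-coded categories because users can invent their own groupings. The Python sketch below shows one possible approach. It assumes a hypothetical `#tag` convention, which these notes do not specify, and groups recordings by the tags found in their titles.

```python
# Minimal sketch: group recordings by "#tag" words in their titles.
# The "#tag" convention is a hypothetical choice; the notes only say
# that tags would be added to the title field.
import re
from collections import defaultdict

TAG = re.compile(r"#(\w[\w-]*)")


def title_tags(title):
    """Lower-cased tags found in a title, without duplicates, in order of appearance."""
    return list(dict.fromkeys(t.lower() for t in TAG.findall(title)))


def group_by_tag(titles):
    """Map each tag to the titles carrying it; untagged titles are grouped separately."""
    groups = defaultdict(list)
    for title in titles:
        for tag in title_tags(title) or ["(untagged)"]:
            groups[tag].append(title)
    return dict(groups)


recordings = ["Interview 3 #nursing #pilot", "Lecture week 2 #Nursing", "Team meeting"]
print(group_by_tag(recordings))
```

Tags are lower-cased, so `#Nursing` and `#nursing` fall into the same group.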

- Ability to replay just the video clip found by a search (at present the recording plays on from the clip's start time and the user has to pause it manually at the clip's end time)

Possible Solution:

Using linked multimedia fragments, but this is not feasible within the project timescale

Additional Issues NOT in original Proposal

- Users find it difficult to understand how to store and link to their recordings in their own web space

Possible Solution: (Yunjia is currently investigating this)

Allowing recordings to be uploaded into the database rather than only being linked to in the user’s own web-based storage area

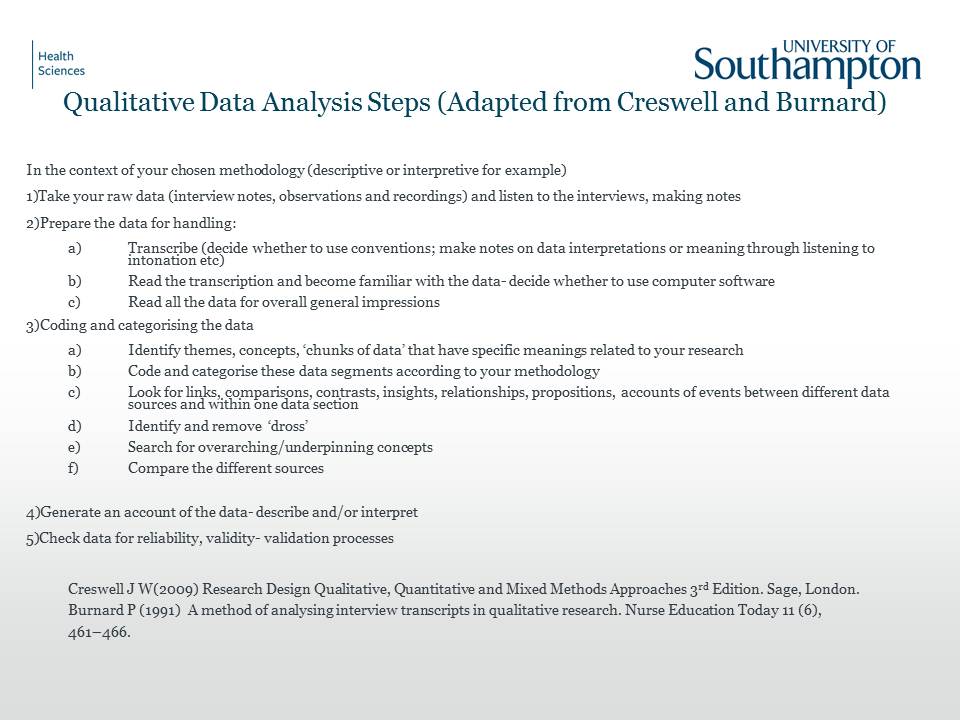

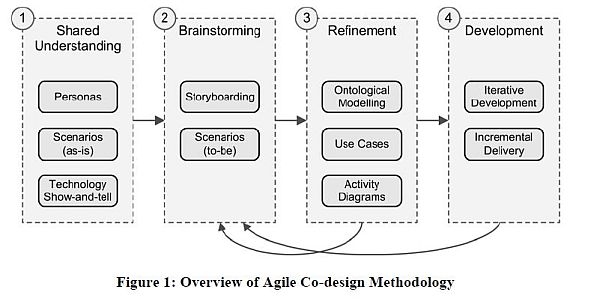

Yunjia then gave a presentation on the work already carried out on uploading video and audio recordings and on the changes being made to the interface. A discussion followed and the ideas were accepted. The website is not public at present, but a series of slides showing how the system is changing is included with these notes.

There was no other business and possible dates for the next meeting have been added to the Doodle Calendar for October.

The next meeting will be held on October 13th from 2-3pm in the Access Grid Room, Building 32 level 3.
