SS12: EU Code for a Cause 2014 is supported by Project Possibility at the ICCHP 2014 conference having links with eAccess+, AAATE and ICTpsp. The organisers state that the “aim is to inspire students, faculty and other stakeholders in Computer Science/Engineering and related fields to take up accessibility and Assistive Technology as a topic. It

- introduces the power and the need of eAccessibility to the next generation of software engineers

- brings students in contact with the eAccessiblity and AT field and in particular with end users

- contributes to easy and fast solutions of pressing AT and eAccessibility problems outlined and defined by users

- releases code as open source which facilitates an accelerated path towards products and services.

Thereby a long term impact on the uptake and implementation of accessibility in practice is expected.”

The University of Southampton team from the Electronics and Computer Science Web and Internet Research Group have attempted to develop a Symbol Dragoman and presented the idea to the Project Possibility (SS12) judges on 7th July with a set of PowerPoint slides and a demonstration.

According to Wikipedia “A dragoman was an interpreter, translator and official guide between Turkish, Arabic, and Persian-speaking countries and polities of the Middle East and European embassies, consulates, vice-consulates and trading posts. A dragoman had to have a knowledge of Arabic, Persian, Turkish, and European languages.”

Symbol Dragoman aims to allow someone who has no spoken language and uses pictograms or images to communicate in Arabic or English. The user can combine chosen ‘symbols’ in any way they want to produce a sentence that can be read or heard in both languages, with the potential of offering any combination of languages in the future.

Learning Curve

In this case the team had to develop a basic understanding of Augmentative and Alternative Communication (AAC), where users are learning the language of symbols. This included:

- The issues that occur in a bilingual environment, such as Arabic and English

- Understanding whether words above or beneath the pictogram are helpful in multilingual situations

- Coping with single-word and multiword differences that affect syntax and semantics

- Dealing with situations where one symbol can represent several concepts, or where several symbols are needed to make up one concept

The AAC user often may not be able to read the text in Symbol Dragoman. Text to speech can offer some support, but a translation is still needed to help a carer or assistant who speaks another language.

Examples

‘put in’ possibly means ‘insert’ in Arabic – أدخل

This symbol also means ‘tidy up’ or نظف ويرتب

But now we have ‘put in a safe place’, which could mean put in a ‘secure’ place. In Arabic this is وضعه في مكان آمن, which literally means ‘he put him in a safe place’, as there is no ‘it’ in Arabic. The Arabic verb in its most basic form is third person masculine. It should be noted that in English we would also need a pronoun such as ‘it’ in this case, whereas in Arabic the letter that represents ‘it’ or ‘him’ is part of the verb.

The problem is that we need a system that not only translates the symbol into a word or multiword in both Arabic and English but also allows the user to build a sentence in symbols that can be recognised in both languages, where order is not important but the sentence is understandable with correct grammar.

Requirements

- A system that will allow Arabic symbol users to work in both languages with the symbols and words appearing from right to left for Arabic and left to right for English

- A system that works out what will possibly be the meaning of a collection of symbols chosen by the AAC user in any order

- Grammatically correct sentences / phrases presented in two languages

The Outcome

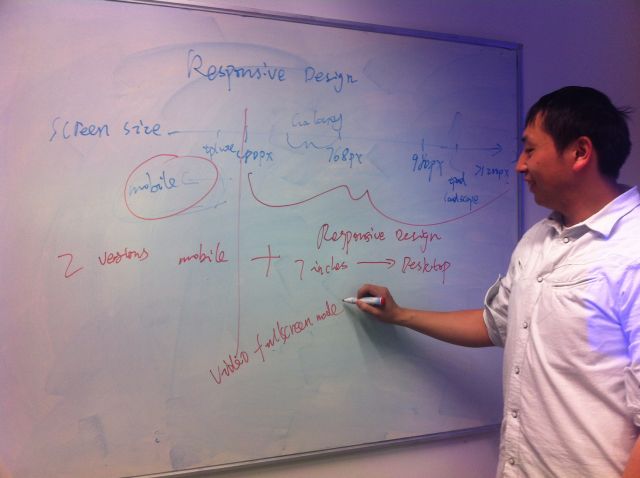

The outcome is an open source, web-based application that has been designed using responsive methodologies, allowing it to be used within a browser on a computer or tablet.

It makes use of Creative Commons symbols from ARASAAC and The Noun Project. These symbols have been categorised and represent a sample set of words provided by young AAC users and their carers in Doha, who are from Arabic speaking families but are often taught or have therapy with international specialists who do not speak Arabic.

The team have drawn on Natural Language Processing and machine learning to offer users a more speedy and flexible approach to symbol choices and sentence production. TATOEBA is being used as the source for sample sentences.

TATOEBA has a database with more than 3 million sentences in 179 different languages. Among them are 440 thousand sentences in English and 15 thousand in Arabic. The database stores translation relationships between sentences in different languages, in the form ‘sentence 1 in language 1 is the translation of sentence 2 in language 2’, and those translations are created by users in the TATOEBA community. Currently, there are more than 6 million translation relationships in the database. The sentences have been downloaded along with the translation relationships between them. These have been placed in an Elasticsearch (ES) server, which has been wrapped with a RESTful API so that the front end can query the sentences.
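As a rough illustration of the data described above, the following Python sketch loads sentences and translation relationships from tab-separated text. It assumes the layout of the public TATOEBA exports (one `id<TAB>language<TAB>text` row per sentence and one `id<TAB>translation id` row per relationship) and ISO 639-3 language codes such as `eng` and `ara`. The function names and the sample rows are made up for this example and are not taken from the project code.

```python
from collections import defaultdict

# Hypothetical sample rows in the assumed export layout.
SAMPLE_SENTENCES = (
    "1\teng\tPut it in a safe place.\n"
    "2\tara\tوضعه في مكان آمن\n"
    "3\tfra\tMets-le en lieu sûr.\n"
)
SAMPLE_LINKS = "1\t2\n2\t1\n1\t3\n3\t1\n"


def load_sentences(tsv_text, languages=("eng", "ara")):
    """Return {sentence_id: {"lang": ..., "text": ...}} for the wanted languages."""
    sentences = {}
    for line in tsv_text.split("\n"):
        parts = line.split("\t", 2)
        if len(parts) != 3:
            continue  # skip blank or malformed rows
        sentence_id, lang, text = parts
        if lang in languages:
            sentences[int(sentence_id)] = {"lang": lang, "text": text}
    return sentences


def load_links(tsv_text, sentences):
    """Return {sentence_id: {translation_id, ...}}, keeping only known sentences."""
    translations = defaultdict(set)
    for line in tsv_text.split("\n"):
        parts = line.split("\t")
        if len(parts) != 2:
            continue
        source, target = int(parts[0]), int(parts[1])
        if source in sentences and target in sentences:
            translations[source].add(target)
    return translations


sentences = load_sentences(SAMPLE_SENTENCES)
links = load_links(SAMPLE_LINKS, sentences)
for target in links[1]:
    print(sentences[1]["text"], "<->", sentences[target]["text"])
```

Filtering to two languages at load time keeps the index small, which matters when only a fraction of the full database is in English or Arabic.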

When symbols are selected in the user interface, the words and phrases that the symbols represent in the chosen language are combined into a query for the ES server. The response contains all the sentences in the ES server that include all of those words and phrases in that language, together with any available translations for each sentence. Because the sentences are written by a community whose members use these word combinations in their first language, accuracy rates should be higher than with machine translation.
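The query step might look something like the sketch below, which only builds the request body and does not contact a server. It uses the `bool` query with `match_phrase` and `term` clauses from the Elasticsearch query DSL as found in recent versions; the release the team ran may have needed a different form. The field names `lang` and `text`, the function name and the default `size` are assumptions made for the example.

```python
import json


def build_sentence_query(phrases, lang, size=10):
    """Build a request body asking for sentences in `lang` that contain
    every phrase, in any order.

    Assumed index fields (hypothetical): "lang" holds a language code and
    "text" holds the sentence itself.
    """
    return {
        "size": size,
        "query": {
            "bool": {
                # Restrict results to the language the user is working in.
                "filter": [{"term": {"lang": lang}}],
                # Every selected word or multiword phrase must be present.
                "must": [{"match_phrase": {"text": phrase}} for phrase in phrases],
            }
        },
    }


print(json.dumps(build_sentence_query(["put in", "safe place"], "eng"), indent=2))
```

Because each phrase is a separate `must` clause, the order in which the symbols were chosen does not affect which sentences match.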

However, there are many more sentences available in English than in Arabic, so some adjustments have had to be made. It was also found that the number of sentences offered to the person engaging with the AAC user should be reduced, to allow a dialogue to develop that is not just based on ‘yes/no’ responses.
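The post does not say how the list of sentences was reduced. One possible rule, shown here purely as an assumption, is to keep only sentences that have at least one translation, prefer shorter sentences, and cap the list at a small number. The function name, the dictionary keys and the default limit are all hypothetical.

```python
def pick_suggestions(candidates, limit=5):
    """Return at most `limit` candidate sentences, shortest first.

    Each candidate is a dict with a "text" string and a "translations" list.
    Sentences with no translation are dropped because they cannot be shown
    in both languages.
    """
    translated = [c for c in candidates if c["translations"]]
    ranked = sorted(translated, key=lambda c: len(c["text"].split()))
    return ranked[:limit]


candidates = [
    {"text": "Please put it in a safe place for me.", "translations": ["(Arabic translation)"]},
    {"text": "Put it in a safe place.", "translations": ["(Arabic translation)"]},
    {"text": "He put the box in a safe place.", "translations": []},
]
for suggestion in pick_suggestions(candidates, limit=2):
    print(suggestion["text"])
```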

Sample screen shots showing a choice of English and Arabic sentences

Code availability

The open source code is available on Bitbucket.

Instructions

The user is able to select a symbol from the web pages using the keyboard or mouse.

A simple sentence can be built by selecting individual symbols, with the images appearing in the edit box above. The menu buttons can then be used to alter the content and the language.

Symbols can be deleted one by one using the orange framed back arrow.

The entire phrase/sentence can be deleted using the red framed trash can.

The symbols are changed into a choice of sentences by selecting the green framed rotor.

Should one of the categories on the right be chosen, the user returns to the home page using the blue framed arrow.

The book with open pages toggles access to second language – Arabic or English.

If you do not have Arabic text to speech on your computer, it can be activated by highlighting the text and using the ATbar speech output.

Keyboard and mouse accessibility includes the ability to use tabbing, Enter and several access keys, for example numbers for categories and initial letters for menu actions.

There is also the option to use the open source ATbar to provide further accessibility options such as coloured overlays and text to speech support in English and Arabic once a sentence has appeared.

Challenges Arising

Odd sentences that do not relate to the choices made – often where there are too many alternative words available.

Blank results when the chosen symbols do not relate to any sentences – often because too many symbols have been used in a random way.

Evaluation

At present, some testers felt that the accuracy rates were still a problem, even though the grammar structures in both languages were often more correct than with word by word translation.

The system needed to be more intelligent in its choice of sentences so people’s names did not appear.
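One simple way to approach the name problem, offered only as a sketch and not as something the team implemented, is to screen out English sentences that appear to mention a person before they are offered to the user. The heuristic below flags any capitalised word after the first one (other than the pronoun ‘I’) and any word from a small list of names. The function name and the default name list are assumptions for illustration.

```python
import re


def mentions_person(english_sentence, known_names=frozenset({"Tom", "Mary"})):
    """Guess whether an English sentence contains a person's name.

    Heuristic only: it also flags days, months and place names, and it
    cannot be applied to Arabic text, which has no capital letters.
    """
    words = re.findall(r"[A-Za-z']+", english_sentence)
    # Strip possessives and contractions: "Tom's" -> "Tom", "I'm" -> "I".
    stems = [word.split("'")[0] for word in words]
    if any(stem in known_names for stem in stems):
        return True
    # The first word is always capitalised, so only look at the rest.
    return any(stem[:1].isupper() and stem != "I" for stem in stems[1:])


for sentence in ["Tom put it in a safe place.", "Put it in a safe place."]:
    print(sentence, mentions_person(sentence))
```

Since each English sentence is linked to its Arabic translation, filtering on the English side would also remove the matching Arabic sentence.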

More core vocabulary options with an increased number of symbols may provide better rates of accuracy.

Note

Several student groups took part in the Project Possibility SS12 competition and the well deserved winners produced a very polished application called PAVE that analysed PDF documents for their accessibility and guided users to make appropriate changes.