The final help files and videos are now in place.

The Synote Researcher transcript (.doc file) can be downloaded.

Help file (Word .doc download). This can also be found on the home page of Synote Researcher.

It has always been difficult to have a full team meeting and our last meeting was no different, but by using the University of Southampton’s iSurvey system we have managed to get answers to some of our questions. Moreover, through the use of face-to-face interviews, emails, calls and the blog we have gained an insight into how to develop an Adaptable and Learnable User Interface for Analysing Recordings. Synote, for the researcher, has been developed into a freely available, open source, downloadable application that can be used by any individual, institution or organisation.

We believe that, by using a co-design methodology with a very open approach to the project evaluation, we have succeeded in improving the ‘existing user interface’ and in adapting Synote as an “e-research tool, in order to make [it] easier to learn by non-specialist users.” (JISC Usability and Adaptability of User Interfaces). The final sample video to illustrate its use is being produced and will be made available.

The complete agenda for the last meeting was mentioned in the last blog, but under the main points I have also provided an overview of some of the ideas and comments made throughout the project and during the face to face interviews. There is a final summary available at the end of this blog.

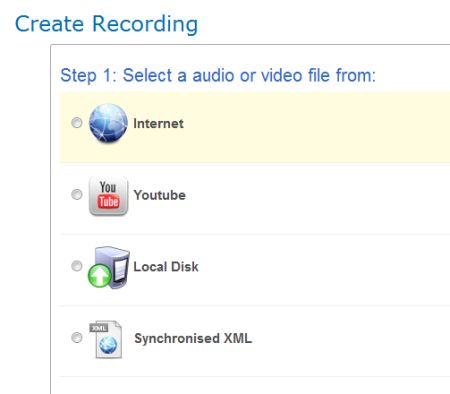

There was much discussion during the lifetime of the project about having to make a new version of Synote whilst leaving the original system intact. Synote was designed for the annotation of educational recordings with notes and transcriptions. The content has often been drawn from lectures, seminars, videos and audio available online. Recordings are not usually held on a specific server for Synote users. Users are required to use their own websites to provide the URL link to the Synote player. During the project we discovered that some users found it hard to make a URL from an uploaded recording, and so the new version includes the ability to upload a recording from a desktop computer and to link to YouTube recordings as well as users’ own websites.

This also led to the decision that the researcher version should be a download rather than held on the University of Southampton server for public use, as is the case with the original version. This decision was made because of the type of sensitive data that might be used by researchers. By having a download the software can be held on secure servers with increased data protection.

Finally, the fact that the new version had some enhanced features meant that it needed an addition to its name! iSurvey was used for voting, and although the name ‘Synote Scholar’ was liked by many members of the team, Mike was the final arbiter, with the name Synote Researcher winning the day. He felt that the name ‘Synote Scholar’ could equally be seen to apply to the original version of Synote and so didn’t make it clear that the new version was designed for researchers!

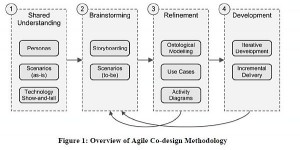

Millard, D., Faulds, S., Gilbert, L., Howard, Y., Sparks, D., Wills, G. and Zhang, P. (2008) Co-design for conceptual spaces: an agile design methodology for m-learning. In: IADIS International Conference Mobile Learning 2008.

Throughout the project we used the blog to act as part of the evaluation process – publicising storyboards, changes made and slide views of the changing Synote Researcher using the agile co-design methodology mentioned at the outset. This allowed us to have an on-going conversation with users and other team members. It resulted in an iterative approach being taken to the development process and was very useful when monitoring changes.

We used John Brooke’s System Usability Scale (SUS) for a quick analysis of users’ views on the usability of Synote Researcher. The results showed an increase in the overall score out of 100 from 48.50 to 67, with 4 out of 5 users agreeing in the second evaluation that they felt “most people would learn to use this system very quickly”. The score that brought the second evaluation down was the need for technical support to set up the system. This is not surprising, as the download may need technical support.
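For readers unfamiliar with how a SUS score out of 100 is reached, the following is a minimal sketch of the standard scoring rule: each of the ten answers is on a 1-5 scale, odd-numbered items contribute the answer minus 1, even-numbered items contribute 5 minus the answer, and the sum is multiplied by 2.5. The function names and the sample answers are assumptions made for illustration only; they are not the project's data or the code used in the evaluation.

```python
from statistics import mean


def sus_score(responses):
    """Convert one respondent's ten SUS answers (each 1-5) into a 0-100 score."""
    if len(responses) != 10:
        raise ValueError("SUS needs exactly ten answers")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each answer must be an integer from 1 to 5")
    total = 0
    for item, answer in enumerate(responses, start=1):
        if item % 2 == 1:
            # Odd-numbered items are positively worded: contribution is answer - 1.
            total += answer - 1
        else:
            # Even-numbered items are negatively worded: contribution is 5 - answer.
            total += 5 - answer
    # The summed contributions (0-40) are scaled to the 0-100 range.
    return total * 2.5


def mean_sus(all_responses):
    """Average the SUS scores of several respondents."""
    return mean(sus_score(r) for r in all_responses)


# Hypothetical answers, for illustration only (not the project's data).
example = [
    [4, 2, 4, 3, 4, 2, 4, 2, 4, 2],
    [3, 3, 3, 3, 3, 3, 3, 3, 3, 3],
]
print(mean_sus(example))
```

Averaging per-respondent scores in this way is how an overall figure such as those quoted above would normally be obtained.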

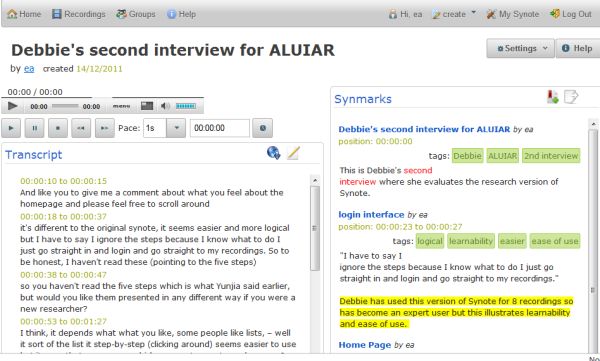

More precise evaluations were undertaken via a series of face-to-face interviews designed to see if the changes made had ensured that Synote was easier to learn and use by researchers wishing to analyse and code multimedia data. During the interviews users were asked to participate in practical exercises such as logging in, searching for recordings, creating their own recordings and adding a transcript and synmarks or annotations. They were asked to comment throughout the meeting and offer a score of 1-5 for look and feel, where 5 was excellent.

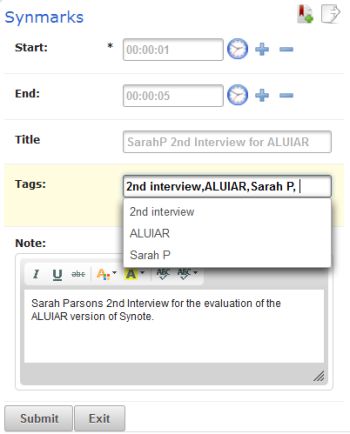

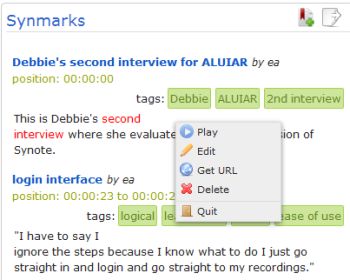

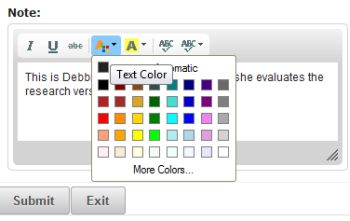

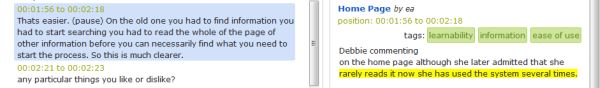

During the discussions we asked for background information about the possible use of the demonstrator and about how users’ views of the Synote service may have changed due to the redesign. The evaluation of the original Synote took place in June 2011 and the final appraisal occurred during November 2011. The interviews were also analysed using Synote Researcher, with annotations and remarks as seen above.

The results for scoring the individual pages were based on the users who completed both interviews, although we had an additional three external users who commented on the new version. As there were some extra interviewees, it was decided that an average of each score given for each page discussed would be taken over both interviews. As can be seen, all the scores increased, and this was despite ideas for further changes and constant iterations.

As a result of all the requests it was felt necessary to revisit the original work packages to check all the elements requested at the outset of the project had been discussed and included in the design. Only one of the requested changes was not possible to achieve in the time available as this would have involved a redesign of the whole Synote system.

Greater flexibility of movement backwards or forwards through a recording (e.g. by typing in a new time), as at present users can only move in 5-second ‘nudges’, move the time slider, or change speed if the recording format and player allow. – Now able to nudge the recording forwards and backwards by 1, 5, 10 and 20 seconds.
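To illustrate the idea of nudging, here is a small sketch of how a playback position might be moved by one of the four step sizes while staying within the recording. This is not Synote Researcher's actual code: the function name, the parameters and the clamping behaviour at the start and end of the recording are all assumptions made for the example.

```python
# Step sizes in seconds, as listed in the post.
NUDGE_STEPS = (1, 5, 10, 20)


def nudge(position, step, duration, forward=True):
    """Return a new playback position (seconds) after one nudge.

    Hypothetical helper: the result is clamped so that it never falls
    before the start (0) or beyond the end (duration) of the recording.
    """
    if step not in NUDGE_STEPS:
        raise ValueError("step must be one of %s seconds" % (NUDGE_STEPS,))
    target = position + step if forward else position - step
    return max(0, min(duration, target))


# Example: 30 seconds into a two-minute recording, nudge forwards by 10 seconds.
print(nudge(30, 10, 120))
```

Clamping is one possible design choice; a player could equally ignore a nudge that would overshoot the recording.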

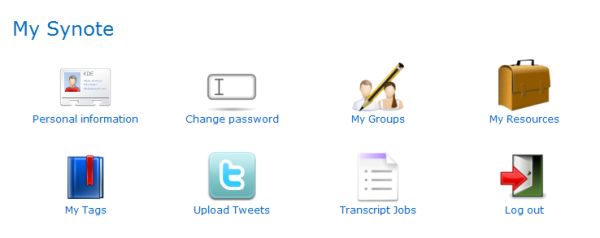

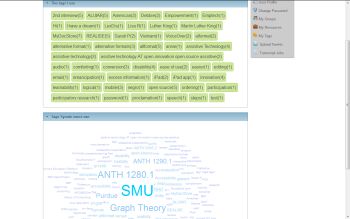

In addition to the changes made to many sections of the original Synote there has been the introduction of ‘MySynote’ – this is an area where the researcher can look at their own recordings, synmarks or annotations and tags used for coding as well as edit their profile. It allows for the sorting of recordings and the viewing of the most commonly used tags as a tag cloud.

Both Yunjia and I were very heartened to hear the comments made by interviewees, including external evaluators who had seen or used the original Synote and were now being introduced to Synote Researcher. Even those who were using the new version commented favourably on the improved usability and learnability. Dr Sarah Parsons commented: “It feels a lot more intuitive and I think with some of those tweaks we have talked about in terms of labelling clear what a couple of those bits are would strengthen that even further, it feels very accessible to me….” (Audio clip)

Debbie said about the home page: “That’s easier. (pause) On the old one you had to find information you had to start searching you had to read the whole of the page of other information before you can necessarily find what you need to start the process. So this is much clearer.”

The final changes as a result of the last few interviews are minor ones listed below

Final Summary

We have been asked to

It is hoped that this final blog has covered all these requirements as it was hard to divide the list into three separate blogs without considerable repetition. We set out to achieve a version of Synote that would suit researchers and be easy to use and learn. We hope we have been successful as well as providing a unique application that can:

There is still the challenge of creating annotated clips from the recordings that automatically stop at the end of the clip – this was not possible to achieve in the time available as it requires a significant redesign of the Synote system.

Rating our success through the use of various communication methods along with specific surveys plus interviews has provided us with a range of quantitative and qualitative data, despite the low number of users over the period of the project. The Nielsen Norman Group is often quoted as saying “The best results come from testing no more than 5 users and running as many small tests as you can afford.”

When it comes to learning lessons these can be summarised as follows: