Project Overview

The project will be led by Dr Mike Wald of Southampton’s School of Electronics and Computer Science (ECS). His team will use an agile, interactive approach that involves end users in regular co-design and review meetings. There will also be regular management meetings and discussions with the Stakeholder Group, which includes researchers from a wide range of schools and faculties (including Psychology, Health Sciences, Nursing, Sociology and Social Policy, Management, and Education).

The project will begin with an initial face-to-face project meeting. Team meetings will be held monthly to monitor progress against objectives, and their minutes will be published on this project blog, where they will be publicly available. There will be weekly technical meetings of the core project staff, and each workpackage will require formal review and sign-off meetings. Financial reports will be supplied by ECS financial management, a Final Report will be produced at the end of the project as specified, and there will be a final project closure meeting.

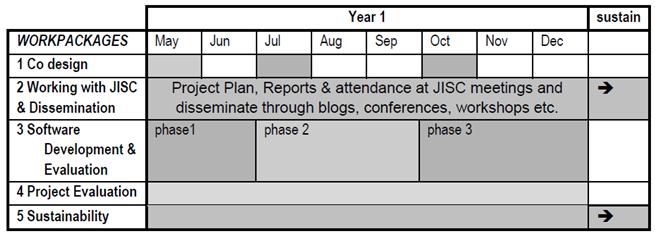

Gantt Chart

Workpackage 1: Co-design. A team meeting to agree requirements and specifications with our panel of stakeholders from different schools (identified in sections 2.1 and 3.1), who will be involved in the co-design and evaluation processes. These users have already identified the following developments needed to make the tool more adaptable and learnable, and any further issues will also be identified:

- Greater flexibility in moving backwards or forwards through a recording (e.g. by typing in a new time). At present the user can only move in 5-second ‘nudges’, drag the time slider, or change the playback speed if the recording format and player allow.

- A drop-down box listing frequently used tags (e.g. for coding the name of the speaker and the category code)

- Foot-pedal control of the player

- When manually transcribing a recording, it is possible to annotate it with the start time of the clip entered automatically, but the end time has to be entered manually. Synote allows a section of a saved transcript to be selected and an annotation to be linked to that section, with the section’s start and end times entered automatically. The system would be easier to use if this were also possible without first having to save the transcript.

- Facility to download the annotation data (e.g. to Microsoft Excel for statistical analysis, charts and graphs, for a report, or into other annotation tools). At present the information has to be copied and pasted.

- Making it harder to exit without saving and so lose any changes made.

- Allowing the user to control recording playback while annotating by providing media player controls in the annotation window. At present a user can annotate a recording and the annotation can automatically read the current time of the recording, but the user cannot easily replay a section of the recording while writing the annotation.

- Use of text font, colour, size and style for coding

- Individual profiles that provide a graduated learning curve suited to the preferences of each researcher, allowing fine-grained control over a wide range of settings (e.g. whether video playback should pause automatically while an annotation is being created).

- Redesign of the interface to improve learnability

- Organisation of recordings into groups and categories to make them easier to find and manage

- Ability to replay just the video clip returned by a search (at present playback starts at the clip’s start time and the user has to pause manually at its end time)

Output: Requirements and functional specifications will be expressed using use cases, personas and scenarios (developed further from the three scenarios outlined in section 2) and will be revisited in WP3 to maintain an agile approach. The ALUIAR demonstrators themselves will also be used to support the ALUIAR co-design and evaluation process through the analysis of recorded project evaluation interviews and observations, in a similar way to that described in scenario 3 in section 2, thus demonstrating our confidence in our own software (an approach colloquially referred to as ‘eating your own dog food’).
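To make the annotation-download requirement above more concrete, the following is a minimal sketch, assuming annotations are held as simple records with start and end times in seconds. The `Annotation` fields, `to_timestamp` and `export_csv` are hypothetical names for illustration only and are not part of Synote. CSV is used because Microsoft Excel and most statistics packages can open it directly.

```python
import csv
import io
from dataclasses import dataclass


@dataclass
class Annotation:
    """Hypothetical annotation record: a coded span of a recording."""
    start_seconds: float
    end_seconds: float
    speaker: str
    code: str
    text: str


def to_timestamp(seconds: float) -> str:
    """Format a time in seconds as HH:MM:SS.mmm for spreadsheet readability."""
    millis = round(seconds * 1000)
    hours, rem = divmod(millis, 3_600_000)
    minutes, rem = divmod(rem, 60_000)
    secs, millis = divmod(rem, 1000)
    return f"{hours:02d}:{minutes:02d}:{secs:02d}.{millis:03d}"


def export_csv(annotations: list[Annotation]) -> str:
    """Return the annotations as CSV text, one row per annotation, in time order."""
    buffer = io.StringIO()
    writer = csv.writer(buffer)
    writer.writerow(["start", "end", "duration_s", "speaker", "code", "text"])
    for a in sorted(annotations, key=lambda a: a.start_seconds):
        writer.writerow([
            to_timestamp(a.start_seconds),
            to_timestamp(a.end_seconds),
            f"{a.end_seconds - a.start_seconds:.3f}",  # duration, for statistics
            a.speaker,
            a.code,
            a.text,  # the csv module quotes commas and quotes inside text
        ])
    return buffer.getvalue()
```

The returned text can be saved to a `.csv` file opened with `newline=""` and then loaded into Excel; keeping a separate duration column avoids having to compute it with spreadsheet formulas.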

Workpackage 2: Dissemination, and working with and reporting to JISC

Output: Project website, syndicated blog, project plan, reports to and liaison with JISC, attendance at JISC programme-level activities (e.g. programme meetings and relevant special interest groups) for at least 10 days per year, and extensive involvement with the JISC community, OSS Watch, workshops, relevant special interest groups, conference presentations, mailing lists, and published papers and articles.

Workpackage 3: Software Development & Evaluation

The software development and evaluation will be agile and iterative to ensure responsiveness to change, with three phases (iterations), each producing a demonstrator, and a final version at the end of the project. Analysis, design and development of the demonstrators in each phase will involve usability studies, including iterative evaluation of the interaction design with users, to finalise improved adaptability and learnability for deployment. Improvements in the usability of the demonstrators will be measured and evaluated using quantitative and qualitative evaluations, tasks, tests and observations based on deployment by members of the stakeholder panel in their own research studies. A wide range of approaches will be used at appropriate stages of pre-development, development and deployment, including low- and high-fidelity prototypes, cognitive walkthroughs, heuristic evaluations, interviews, focus groups and empirical testing. The Adaptable and Learnable User Interface for Analysing Recordings (ALUIAR) demonstrators themselves will also be used to support the software design and evaluation process through the analysis of recorded ALUIAR project evaluation interviews and observations. Beta testing reports will cover testing against requirements as well as acceptance, compatibility, integration, unit and load testing, and will identify problems to be addressed, including integration or interoperability issues. Demonstrators will also be made available to the JISC research community for evaluation and feedback, including through stakeholders’ subject networks and contacts.

Outputs:

Phase 1 output will be prototype demonstrator 1, based on the requirements identified in section 3.1.

Phase 2 outputs will be an evaluation of demonstrator 1 and the development of demonstrator 2, which will incorporate improvements based on that phase 2 evaluation.

Phase 3 outputs will be:

- An evaluation of demonstrator 2, the development of demonstrator 3, and finalisation of the release version of the software

- An evaluation report on the use and acceptance of the software by researchers

- A detailed briefing paper that describes the approach taken and the lessons learned in terms of learnability and usability design

- Documentation, with examples of the software’s use by researchers

- A new, improved interface to the Synote e-research tool that significantly improves its learnability

Workpackage 4: Project Evaluation

Output: An evaluation report on the project by an independent evaluator, drawing on project documentation created throughout the project. The success of the project will be measured by its acceptance and take-up by users.

Workpackage 5: Sustainability

We will consult OSS Watch on sustainability and on access to the code and documentation through a best-fit OSI-approved licence and a suitable governance model. ALUIAR will also liaise with the Software Sustainability Institute. Best practice in source code development and management will be followed, and quality factors built into the workpackages will help ensure a successful open-source life through a good OSMM rating, community engagement and community-stated need. All reports, tools and code from the project will remain on the project server for a minimum of 2 years and will be archived in the institutional repository (EPrints) and an appropriate JISC repository, such as Jorum.

Output: Acceptance by users and a sustainability strategy for the application.